Cluster

An interconnected computer system, or cluster, is a group of individual computers that are coupled together to form a logical network. From the outside, a cluster is usually treated as a single coherent device.

Warning

The basic settings of the cluster are preconfigured at the factory and should only be changed by the customer service of m-privacy GmbH. Improper changes endanger the operation of the entire cluster.

Configuring the cluster settings requires logging in at the console as the administrator config. The Cluster submenu is located in the main menu.

To activate cluster mode, set the menu item Activate TightGate Cluster to Yes in the cluster menu. Only then does the configuration menu open.

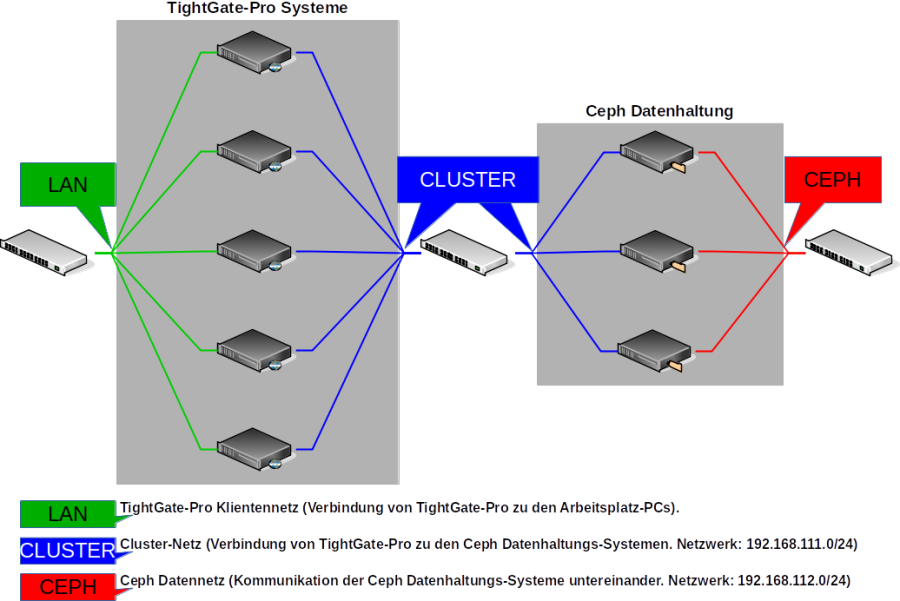

The following diagram illustrates the structure of a TightGate-Pro cluster with Ceph data storage.

The following configuration settings must be made regardless of the type of central data storage:

| Menu item | Description | Note |
|---|---|---|
| Cluster base name* | Computer name used in the cluster without the appended sequential number. The computer names used in the cluster are generated from this name. | |
| Cluster partner IP network* | Cluster network via which the individual TightGate-Pro systems (nodes) communicate with the data storage within the cluster. The nodes in the cluster network must always be assigned consecutive IPv4 addresses. These are independent of the client network. | |
| Maximum nodes in update(*) | Specifies how many nodes in the cluster may perform updates simultaneously. | Please set this value only in consultation with the technical customer service of m-privacy GmbH. |
| Number of sub-clusters* | Number of sub-clusters that are to operate in this federation. If you have only one central location where all users work, enter 1 here. If you have a central location as well as other secondary locations, each with its own TightGate-Pro server, increase the number by one for each additional location. A maximum of 9 sub-clusters is allowed. | |
| Load balancer on this node | Select whether this node should work as a load balancer for one or more sub-clusters. Make sure that no more than 3 load balancers are working per sub-cluster. | |
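The two numeric limits in the table (at most 9 sub-clusters, at most 3 load balancers per sub-cluster) can be checked against a planned layout before the values are entered in the menu. The following is only a minimal sketch: the function name, the node names, and the dictionary layout are hypothetical and not part of TightGate-Pro.

```python
from collections import Counter

MAX_SUB_CLUSTERS = 9      # limit stated in the table above
MAX_LOAD_BALANCERS = 3    # limit per sub-cluster stated in the table above


def check_cluster_plan(num_sub_clusters, load_balancers):
    """Return a list of problems found in a planned cluster layout.

    load_balancers maps a (hypothetical) node name to the list of
    sub-cluster numbers for which that node acts as load balancer.
    """
    problems = []
    if not 1 <= num_sub_clusters <= MAX_SUB_CLUSTERS:
        problems.append(
            f"number of sub-clusters must be between 1 and {MAX_SUB_CLUSTERS}"
        )
    # Count how many load balancers serve each sub-cluster.
    per_sub_cluster = Counter(
        sc for served in load_balancers.values() for sc in served
    )
    for sc, count in sorted(per_sub_cluster.items()):
        if count > MAX_LOAD_BALANCERS:
            problems.append(
                f"sub-cluster {sc} has {count} load balancers, "
                f"maximum is {MAX_LOAD_BALANCERS}"
            )
    return problems
```

An empty result list means the plan respects both limits. The check says nothing about the remaining settings, which still have to be entered in the menu.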

In the next step, specify how the data storage for TightGate-Pro is implemented. The following options are available:

- External Ceph data storage

- Locally virtualised Ceph data storage

External Ceph data storage

If an external Ceph cluster stores the data for the TightGate-Pro cluster, the following settings must be configured:

| Menu item | Setting | Description |
|---|---|---|
| Distributed Ceph data storage* | Yes | Activates the submenu for data storage via Ceph. |
| External/KVM Ceph Cluster* | Yes | Activates distributed data storage via Ceph and opens the further submenu. |
| Ceph Monitor IPs* | 192.168.111.101 192.168.111.102 192.168.111.103 | All IPv4 addresses of the external Ceph monitors. In the default configuration by m-privacy, the Ceph network uses the addresses from 192.168.111.101 onwards. In a cluster with 5 Ceph nodes, the monitor IPs must be supplemented by the addresses 192.168.111.104 and 192.168.111.105. |
| Ceph user* | tgpro | The user who has access rights to the Ceph data storage for the home directory and the backup directory. |
| Ceph-Password* | | The password for the "tgpro" user. |
| Ceph-homeuser-subpath* | homeuser | Path to the HOME directory in the Ceph data storage. |
| Ceph-backup subpath* | backup | Path to the BACKUP directory in the Ceph data storage. |
| ----- | | |
| Shared update cache* | Yes | Enables a shared update cache on the Ceph data storage. All TightGate-Pro systems (nodes) can then update directly from there and do not have to download updates individually. |
| ----- | | |
| Subcluster: XXX* | | The submenu is described in detail in the section on configuring a sub-cluster below. |
| ----- | | |
| Start KVM with Ceph | No | Setting this to Yes activates the virtual machine for the virtual Ceph and displays the associated menu (see below). |
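The value for Ceph Monitor IPs is a space-separated list of addresses. In the example above the addresses are consecutive, starting at 192.168.111.101. Assuming the monitors are numbered consecutively in this way, the list for 3 or 5 Ceph nodes can be generated as sketched below. The helper name `monitor_ips` is hypothetical; it only builds the string and does not talk to Ceph or to TightGate-Pro.

```python
import ipaddress


def monitor_ips(first_ip, count):
    """Build a space-separated list of `count` consecutive IPv4 addresses.

    Assumption: the Ceph monitors use consecutive addresses starting at
    `first_ip`, as in the default configuration described above.
    """
    first = ipaddress.IPv4Address(first_ip)
    return " ".join(str(first + offset) for offset in range(count))


# Default setup with 3 monitors, then a cluster with 5 Ceph nodes.
print(monitor_ips("192.168.111.101", 3))
print(monitor_ips("192.168.111.101", 5))
```

If the monitors in a given installation do not use consecutive addresses, enter the actual addresses by hand instead.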

Virtualised Ceph data storage

If an integrated virtual Ceph data storage is in use, the following configurations must be made:

| Menu item | Setting | Description |
|---|---|---|
| Distributed Ceph Data Storage* | No | Disables the submenu for data storage via external Ceph. |
| Distributed Cluster Data Storage* | No | Disables the submenu for data storage. |
| Start KVM with Ceph | Yes | Activates virtual data storage via Ceph and opens the further submenu. |
| KVM Network Bridge | brX | Name of the network bridge through which the KVM is connected. |
| KVM network MAC address | 52:54:01:XXX | MAC address of the KVM network. ATTENTION: Must be different on each node. |
| KVM partition | /dev/xxx | Partition on which the Ceph KVM is installed (default /dev/sda7). |
| KVM number of CPUs | 1-9 | Number of CPUs available for the Ceph KVM (default 3). |
| KVM memory size in MB | 2048 | Size of the main memory available for the Ceph KVM (default 6172). |

Configuration of a TightGate-Pro sub-cluster

| Menu item | Setting | Description |
|---|---|---|
| Sub-cluster name* | | Freely selectable name for the sub-cluster. |
| Hostname base number* | 1 | Number of the first node in the sub-cluster. The number is appended to the name of the first node in the sub-cluster and then incremented for each further node. By default, it starts at 1. |
| Base IP* | 192.168.111.x | Base IP of the sub-cluster network. The IP should stay within the default network 192.168.111.0 and differ only in the host part. Each sub-cluster has one base IP, from which the addresses are counted up for the number of nodes. Make sure that there are enough IP addresses for the number of nodes in the sub-cluster. Important: It is essential that all nodes in the sub-cluster have consecutive IP addresses. |
| Number of Nodes* | | Number of nodes in the sub-cluster. |
| DNS domain* | | DNS domain name of this sub-cluster in the load balancer. This only applies to the name resolution of the TightGate viewers. |
| Client base IP* | 10.10.10.0 | Client IP address of the first TightGate-Pro in the sub-cluster. Important: It is essential that all nodes in the sub-cluster have consecutive IP addresses. |
| Max. Number of DNS entries* | | Maximum number of DNS entries of this sub-cluster in the load balancer. |
| Additional DNS entries* | | Any additional DNS entries for the sub-cluster. A reverse resolution is automatically created for each entry. |
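The numbering rules in the table (a hostname number that counts up from the hostname base number, and consecutive addresses that count up from the base IP and the client base IP) can be sketched as follows. This is only an illustration for planning address ranges. The function name, the example base name `node`, and the example addresses are hypothetical, and the exact hostname format that TightGate-Pro generates (for instance separators or zero padding) is an assumption.

```python
import ipaddress


def plan_sub_cluster(base_name, first_number, base_ip, client_base_ip, node_count):
    """List (hostname, cluster IP, client IP) for each node of a sub-cluster.

    Assumptions: the sequential number is appended directly to the cluster
    base name, and both address ranges are strictly consecutive.
    """
    cluster_ip = ipaddress.IPv4Address(base_ip)
    client_ip = ipaddress.IPv4Address(client_base_ip)
    return [
        (f"{base_name}{first_number + i}", str(cluster_ip + i), str(client_ip + i))
        for i in range(node_count)
    ]


# Hypothetical sub-cluster with three nodes.
for row in plan_sub_cluster("node", 1, "192.168.111.11", "10.10.10.1", 3):
    print(row)
```

Listing the planned addresses this way makes it easier to see whether the range is large enough for the number of nodes and whether it overlaps with addresses that are already in use, such as the Ceph monitor IPs.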

After the settings have been made, they must be saved via the menu option Save. Afterwards, the menu option Apply activates the saved settings.